The Cobra Effect in digital projects

Failing to set the right goals at the beginning can have a disastrous impact on your product. This history lesson warns us of the consequences.

It’s the late 19th century, and India is under British rule. Colonists flock to the new territory of Delhi, and with them goes their government’s influence.

As it happens, British expats aren’t too keen on Delhi’s cobra population. In fact, the prospect of a venomous snake hiding in your gutter is a rather terrifying one. So the government comes up with a plan. Aiming to eliminate the slithering menace, they decree a bounty on cobras: a financial reward for each dead snake.

The initiative’s success is measured directly by the number of snakes killed. What could go wrong?

Well, the savvy people of Delhi see an opportunity. They set about breeding more snakes. An ample supply of cobras means they can keep cashing in on the bounty and reaping the rewards. Paradoxically, it is the government’s own goal that incentivises them to do this.

When the British find out, the bounty is naturally withdrawn. In response, the breeders release their (now worthless) cobras into the wild, and the snake population surges.

In spite of efforts to solve the problem, it’s actually gotten worse.

A warning from history

This anecdote teaches us an important lesson. When we set out to do something, we should first think carefully about what our goals are, and how we’ll measure them. The British government’s goal should have been to eliminate the cobra population, but instead they focused only on ‘number of dead cobras’. These are two very different things, and the mistake led to unfortunate consequences.

When setting goals, teams often fail to think about second-order effects. As soon as you set a goal, you begin to influence decision-making, because people will align their own actions towards meeting it. If we’re not careful to set the right goals, we can encourage undesirable behaviours that run contrary to the outcomes we wanted in the first place.

Examples of this occur throughout history, but we also witness them in our day-to-day work as digital professionals. If goals aren’t set and measured in the right way from the very start, it can have a negative impact on your product.

Beware of misleading KPIs

In our work at Pixel Fridge, we often see organisations setting unsuitable key performance indicators (KPIs) for their websites and apps. These tend to be the default metrics displayed by whatever analytics tool they are using. Usually things like:

- Average number of page views per session.

- Average session time.

- Website bounce rate.

- Number of sign-ups.

People often hold to the idea that the bigger these numbers, the better. There’s a myth that these simple metrics translate directly into how well a product is doing. After all, we’re conditioned by our corporate environments to see an upward-trending graph and think “that looks impressive”.

A high website ‘bounce rate’ (the proportion of visitors who land on the site and leave without visiting another page) is often called out as an inherently bad thing. There’s an ingrained belief in some organisations that we should always strive to bring bounce rates down. But if people are getting exactly what they need, and the page is meeting its objectives, is it really a big deal?
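To make the definition concrete, here is a minimal Python sketch that computes bounce rate from a list of visits. It assumes a hypothetical, simplified `Session` record holding the number of pages viewed and whether the visitor got what they needed; that second field is exactly the kind of context a default analytics dashboard usually doesn’t have. The two illustrative sites below have identical bounce rates but very different outcomes.

```python
from dataclasses import dataclass


@dataclass
class Session:
    """Hypothetical, simplified visit record (not any analytics tool's format)."""
    pages_viewed: int
    task_completed: bool  # did the visitor get what they needed?


def bounce_rate(sessions: list[Session]) -> float:
    """Proportion of sessions that viewed one page and then left."""
    if not sessions:
        return 0.0
    bounces = sum(1 for s in sessions if s.pages_viewed == 1)
    return bounces / len(sessions)


def completion_rate(sessions: list[Session]) -> float:
    """Proportion of sessions in which the visitor completed their task."""
    if not sessions:
        return 0.0
    return sum(1 for s in sessions if s.task_completed) / len(sessions)


# Illustrative data only: same bounce rate, opposite user outcomes.
site_a = [Session(1, True)] * 8 + [Session(3, True)] * 2   # one page was enough
site_b = [Session(1, False)] * 8 + [Session(3, True)] * 2  # visitors gave up

print(bounce_rate(site_a), completion_rate(site_a))
print(bounce_rate(site_b), completion_rate(site_b))
```

Both sites report an 80% bounce rate, yet on the first every visitor succeeded and on the second most did not. The bounce rate alone can’t tell them apart.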

The wrong goals can hurt the user experience

Working with a large multinational company, we once witnessed the damage that unsuitable KPIs can cause. Until recently, the company had been measuring the success of its websites purely on a low bounce rate and a high number of page views per session. There was no context here, and no consideration for the user experience.

The company had lost sight of what these websites were actually supposed to achieve. Design ‘enhancements’ became focused on manipulating these usage metrics. This led to misguided requests such as adding a welcome screen to bring down the bounce rate, or splitting information over many short pages to increase page views. When we conducted usability testing, it was clear that users found these design decisions annoying. They made the sites less enjoyable, and as a result users would be less inclined to use or recommend them.

By setting the wrong goals, the company had set out on a path of making design decisions that actively worked against the user.

Understand first, measure second

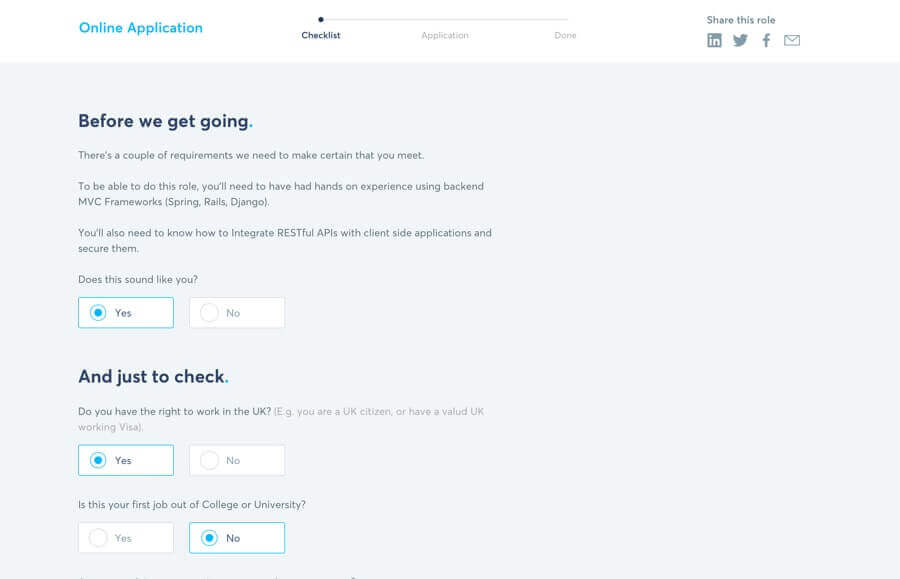

When creating a new website or app, always begin by agreeing on user and business requirements. The whole team needs to be on the same page with this. When we’re all clear about why people are using our product, goals can be framed in a sensible way.

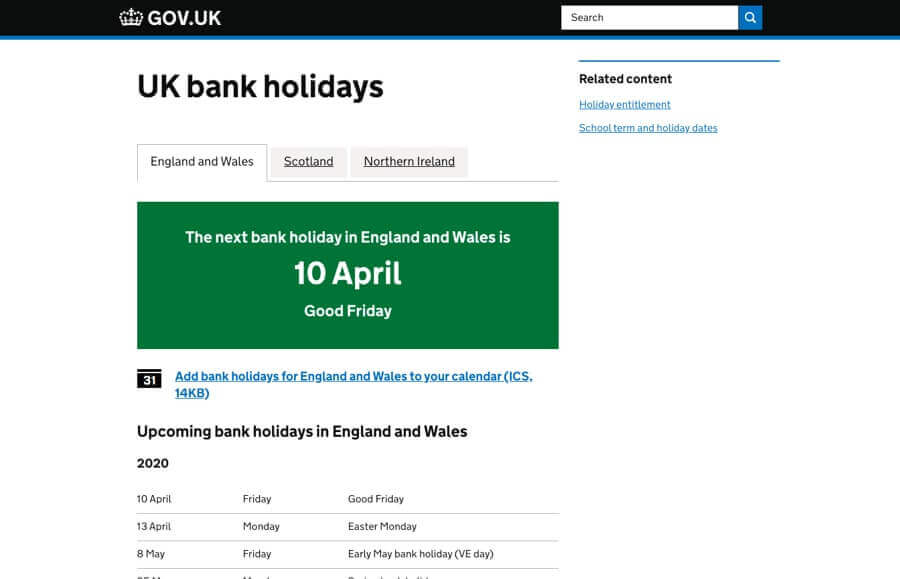

Let’s take an example. Imagine we’re designing a site to provide important public service information. People need to get this information as quickly and efficiently as possible. That’s our focus, our goal.

But how do we measure success? You might consider average session length a good way to track this. But what does that really tell us?

A longer visit duration may tell us that people are really engaged, and that they’re taking the time to read all of the content carefully… But then again, it could also show that there’s friction: people might be spending longer than necessary trying to find what they need. From session length alone, it’s impossible to say definitively whether this is a good or bad outcome.

Tracking usage metrics like this can be interesting, but in this scenario session length isn’t a suitable measure of our success. Instead, we should look for other ways of measuring the goal. We might consider:

- Logging the number of email help requests before and after the launch.

- Running user testing sessions, and measuring success ratings.

- Adding a simple ‘did this answer your question?’ survey at the bottom of the page.
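As a rough illustration of the first and third ideas, the sketch below compares help-request counts before and after launch and summarises answers to a ‘did this answer your question?’ survey. The function names, data shapes and figures are all hypothetical, chosen only to show the arithmetic.

```python
from collections import Counter


def percent_change(before: int, after: int) -> float:
    """Percentage change from `before` to `after` (negative means a reduction)."""
    if before == 0:
        raise ValueError("baseline count must be non-zero")
    return (after - before) / before * 100


def helpfulness(responses: list[str]) -> float:
    """Share of 'did this answer your question?' responses that were 'yes'."""
    counts = Counter(r.strip().lower() for r in responses)
    total = sum(counts.values())
    return counts["yes"] / total if total else 0.0


# Hypothetical figures: weekly email help requests before and after launch.
print(percent_change(before=120, after=90))
# Hypothetical survey responses collected at the bottom of a page.
print(helpfulness(["Yes", "yes", "no", "yes"]))
```

A drop in help requests is only meaningful if traffic was broadly similar in both periods, so numbers like these work best alongside qualitative evidence from user testing.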

These are just a few simple ideas. Often, it takes a combination of different quantitative and qualitative measurements to build a full picture of how well we’re doing. To define these measurements, collaboration is always the best approach.

By working alongside subject matter experts, you can make sure goals are articulated in the right way and that appropriate measures are set to track them.

The moral of this story

The ‘Cobra Effect’ happens when we lose sight of a product’s original purpose: when we lose context and become more fixated on arbitrary performance metrics than on the reason something exists in the first place.

By taking the time to set clear goals, we can avoid the unintended consequences of this phenomenon. What’s most important is that the goals we set always link back to an actual user or business need.

By keeping things focused in this way, we make sure that websites and apps stay useful, usable, and true to their original purpose.

Chris Myhill

— Co-Founder